About Me

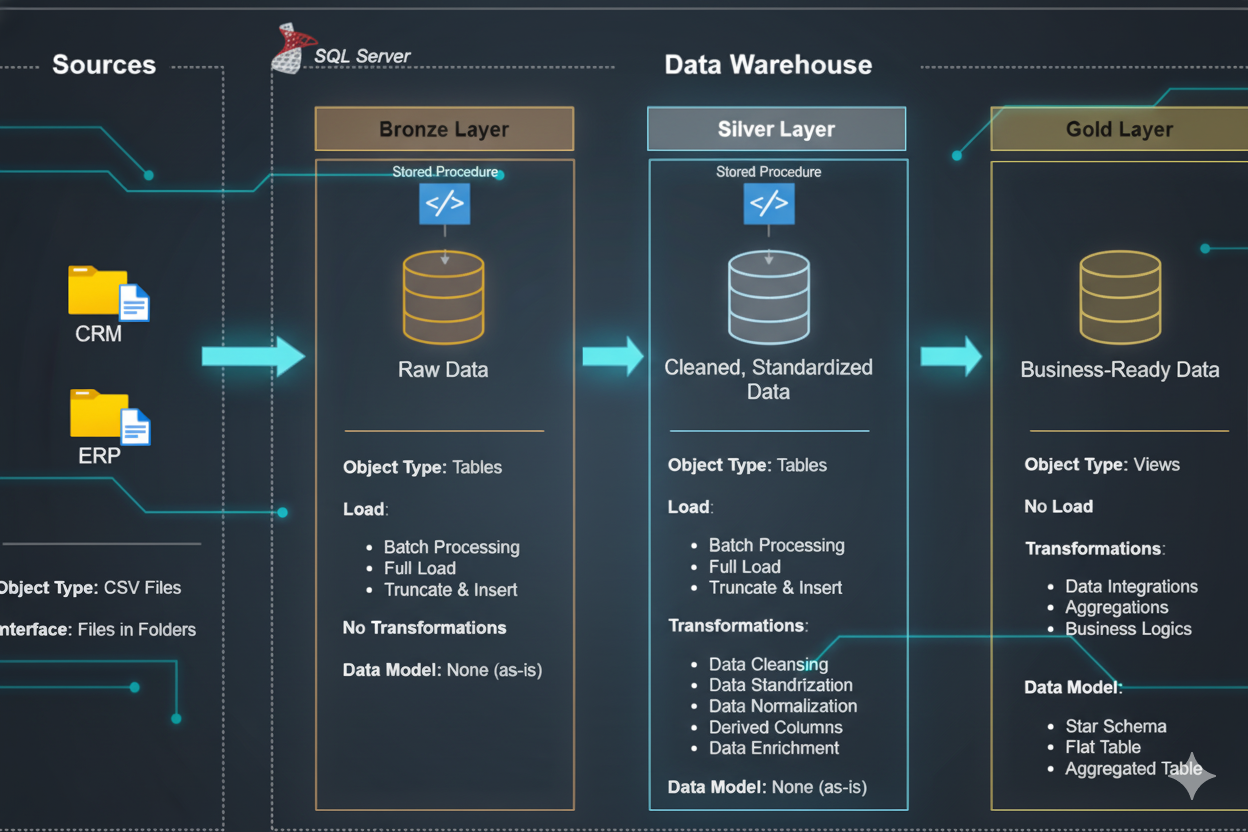

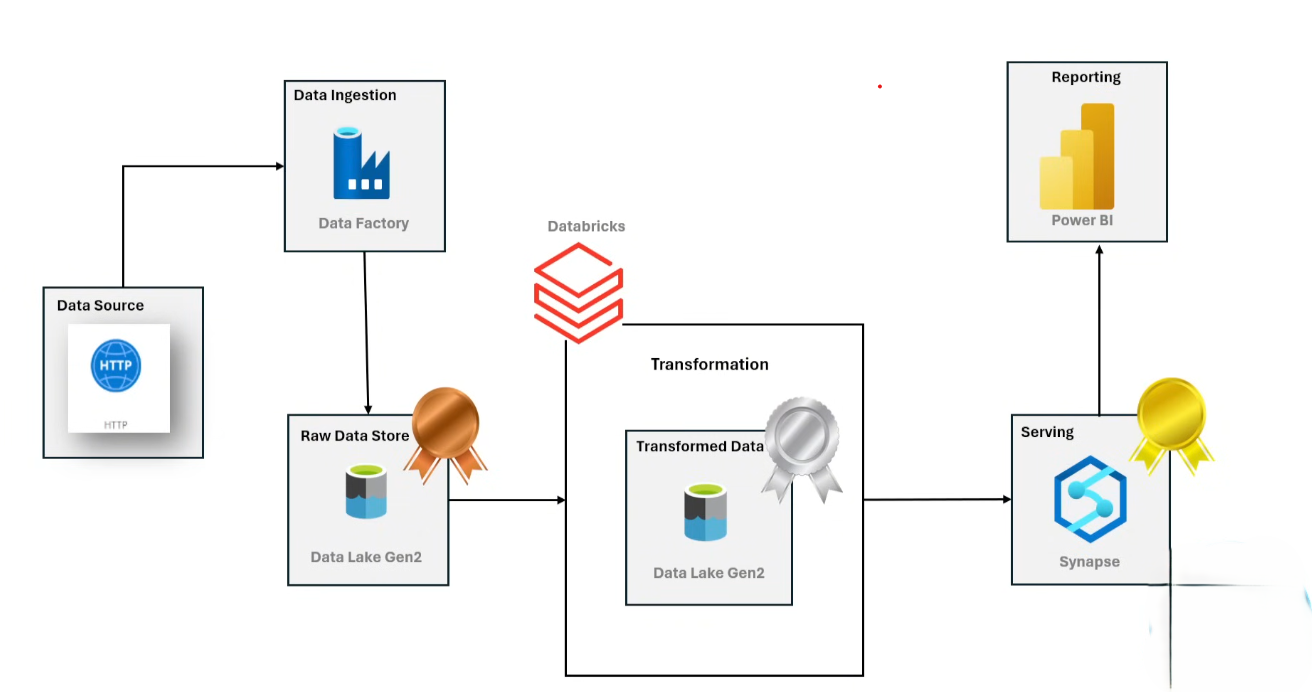

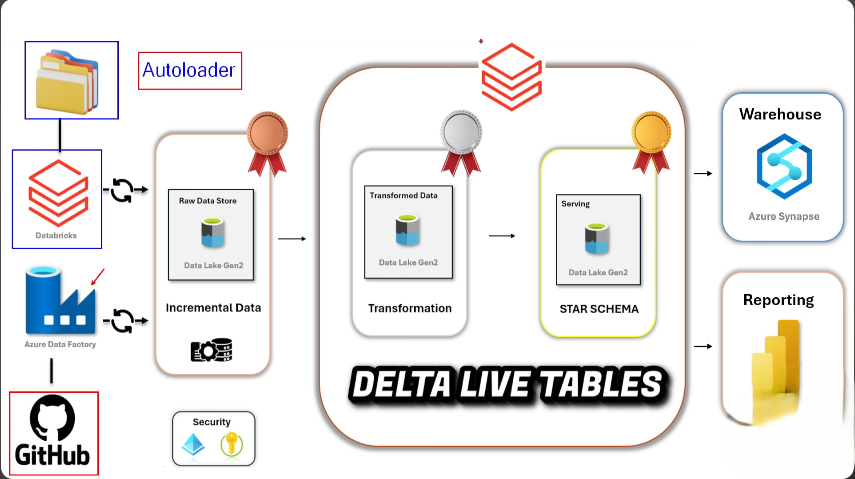

I build data systems that are dependable, observable, and easy to scale. My work spans medallion architectures, real-time streaming, and batch pipelines that move data from raw sources to analytics-ready layers.

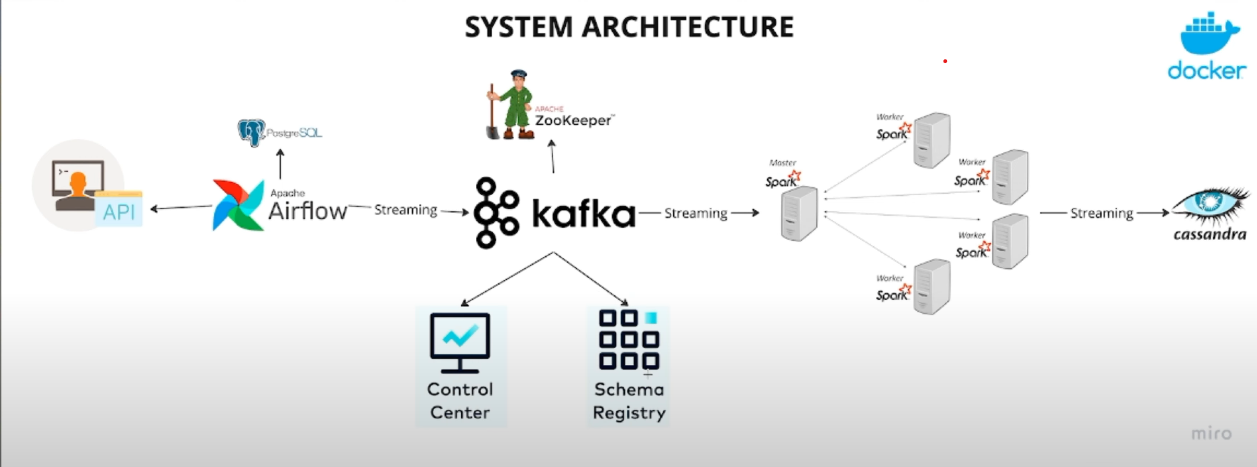

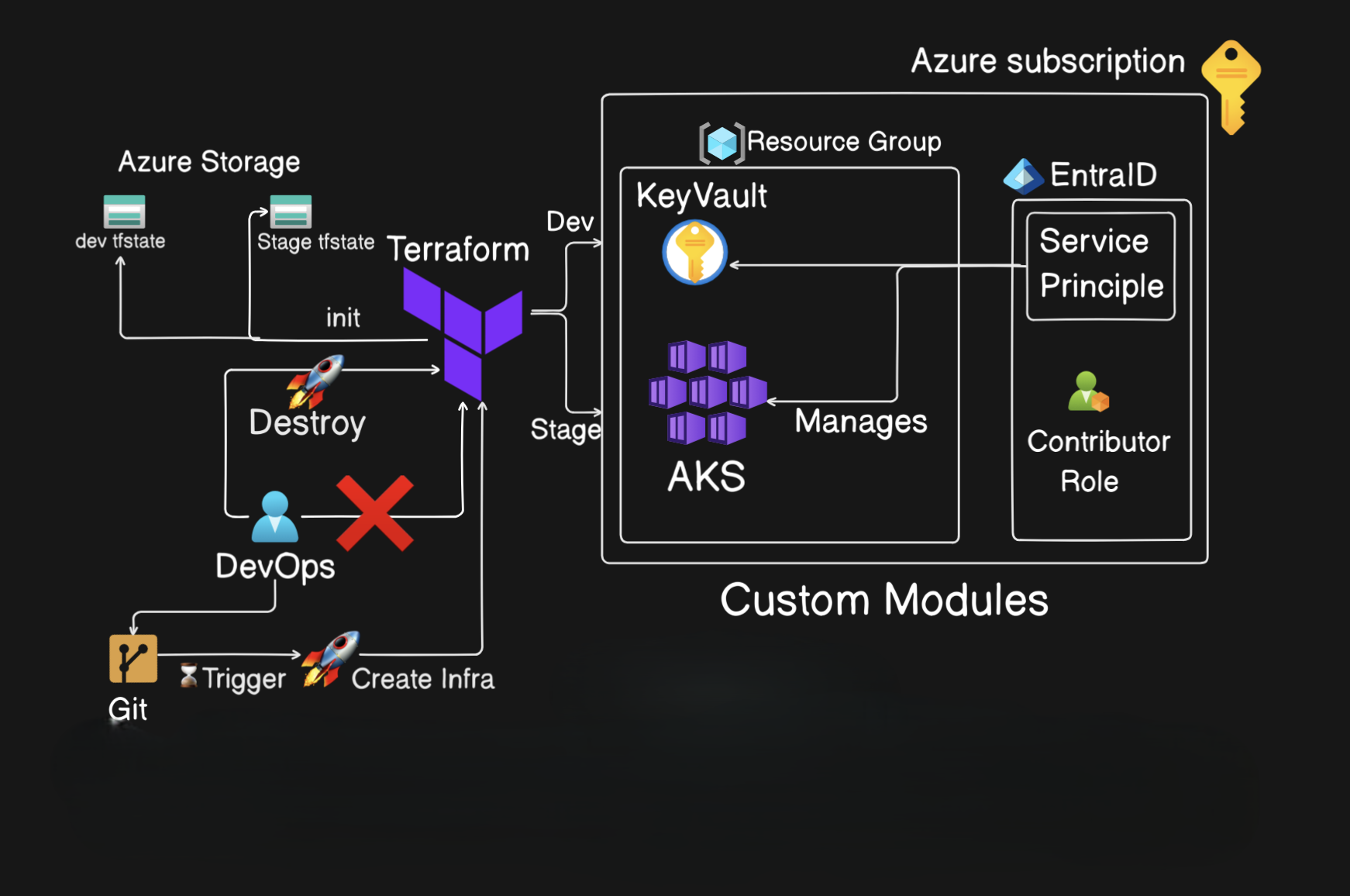

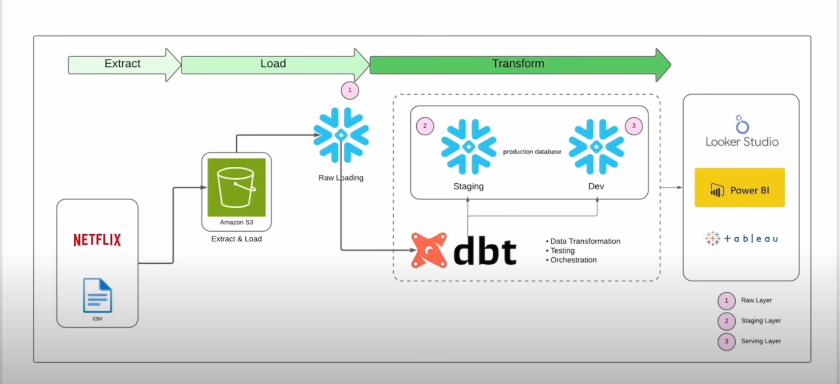

I focus on cloud-native delivery on Azure, using tools such as Databricks, Airflow, Kafka, dbt, and Snowflake to ship production-grade pipelines with clean models and clear documentation.